I'm Nick DiMucci, founder and head developer of MindShaft Games. This is a blog mostly about game development with some software engineering sprinkled in.

Monday, November 17, 2014

Yet Another Addendum: 2D Platformer Collision Detection in Unity

You can see the bug in the video below. Just fast forward to the 1:07 mark and watch the Angel in the lower right corner.

It actually took me a long time to come up with a fix for this, even though now it's such a simple and obvious fix. Without reiterating all of the details of the collision system, I essentially cast rays towards the directions the player is moving. If the player is moving left, I cast evenly spaced horizontal rays from the box collider (4 in this game's case). To cover the corner of the box collider, I use a margin variable to cast ever so slightly out of the box collider bounds; refer to my previous posts on this for further detail.
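To make the ray layout concrete, here is a minimal sketch in plain Python (not the Unity C# used in the game) of spacing ray origins evenly along one vertical edge of a box. The function name, its parameters, and the way `margin` stretches the span past the box are assumptions for illustration, not the original code.

```python
def ray_origins(bottom, top, count, margin=0.0):
    """Return `count` evenly spaced y-coordinates between bottom and top.

    A positive margin pushes the first and last origins slightly outside
    the box (a hypothetical model of the margin described above).
    """
    if count < 2:
        raise ValueError("need at least two rays to cover both corners")
    start = bottom - margin
    end = top + margin
    step = (end - start) / (count - 1)
    return [start + i * step for i in range(count)]


# Four rays along an edge spanning y = 0..3, with no margin.
print(ray_origins(0.0, 3.0, 4))
```

With a non-zero margin, the outermost rays start outside the collider bounds, which is the behavior that ends up detecting collisions the box itself never makes.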

This was causing the snagging issue because while the player's box collider wasn't actually colliding with the tile (and it doesn't really appear to be in the game either), a collision was still being detected due to the margin. By itself, this isn't too bad, but we'd also get a y-axis collision detected, and this, along with an x-axis collision, would cause the snagging/jittering effect.

The ideal solution is to reduce the margin of the raycasts so that we're not casting outside of the box collider, and still guarding against corner collisions. Thus, we introduce diagonal raycasts from the corners of the box collider!

Here is the new code in all its glory.

Note the Move method and when we perform the diagonal raycasts. We only want to perform them when the player is moving through the air, which we check by confirming that the player is moving on both the x and y axes and is neither in a side collision nor on the ground. We then perform a simple raycast (always with the origin in the center of the collider) in the direction the player is moving. When a corner is hit, we simply stop the x-axis movement.
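The decision described above can be sketched roughly as follows, in plain Python rather than Unity C#. The function names and the boolean flags are hypothetical stand-ins for whatever state the real Move method tracks, and the actual raycast is left out; only the "should we cast, and in which direction" logic and the response to a hit are shown.

```python
import math


def diagonal_ray_direction(velocity, side_collision, grounded):
    """Return a unit direction for the diagonal ray, or None to skip it."""
    vx, vy = velocity
    moving_both_axes = vx != 0 and vy != 0
    # Only cast while airborne: moving on both axes, no side hit, not grounded.
    if not moving_both_axes or side_collision or grounded:
        return None
    length = math.hypot(vx, vy)
    return (vx / length, vy / length)


def resolve_corner_hit(velocity, corner_hit):
    """Stop x-axis movement when the diagonal ray hits a corner."""
    vx, vy = velocity
    return (0.0, vy) if corner_hit else (vx, vy)


direction = diagonal_ray_direction((3.0, 4.0), side_collision=False, grounded=False)
print(direction)
print(resolve_corner_hit((3.0, 4.0), corner_hit=True))
```

In an engine, the returned direction would be fed to a raycast that starts at the center of the collider, as described above.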

A simple solution to a problem that was haunting me for a while.

Wednesday, October 15, 2014

Duke Nukem 3D - Game Tutorial Through Level Design

Before we dive in, we need to remember that Duke Nukem 3D was released almost 19 years ago (damn, we're all getting old :( ). The first-person shooter genre was just being born out of prior games such as Wolfenstein 3D and Doom. Duke Nukem 3D, at the time, was a large leap forward in the genre, offering true 3D play, as players could traverse the Y-axis through jumping and jetpacking, along with incredibly expansive, detailed levels and interactivity. Duke3d needed to let players know this wasn't Doom they were playing!

We're going to walk through just the first area of Hollywood Holocaust, and how the level design is used to teach the player about the new mechanics available to him, both as a player who's played Doom, and a player new to the FPS genre entirely.

The game starts with Duke jumping out of his ride (damn those alien bastards!). Immediately, Duke is airborne. He doesn't start grounded; instead, gravity pulls him down the Y-axis. This immediately tells the player that there's a whole new axis of gameplay available. You will not be zipping around just the X and Z axes. This is further emphasized by the fact that you land on a caged-in rooftop. There's nowhere to go but down!

The player is left to roam the enclosed rooftop. The rooftop is seemingly bare at first, but rewards the player for exploring beyond the obvious path with some additional ammo hidden behind the large crate. Exploration and hidden areas are a large part of Duke3d's gameplay, and this is a subtle yet effective way of communicating that to the player.

Next, the player will come across a large vent fan, taped off, with some explosive barrels conveniently placed next to it. The game literally cannot continue until the player figures out the core mechanic of the game: shooting. Not only is the mechanic of shooting being taught, but also the mechanic of aiming at your target. This is all done at a leisurely, comfortable pace for the player. Imagine if there were an enemy guarding the air vent. For a player new to the genre (and back in 1996, it was very common to have someone play this game who had never played an FPS before, not even Doom), it would have been very overwhelming and probably a guaranteed player death.

Once the player figures out aiming and shooting, they are also taught another core mechanic of the game: puzzle solving. Solving little environment-based puzzles will be common going forward, so the player needs to be taught to be aware of their surroundings and to understand that the world is interactive and that interactivity will be key to success.

It's fascinating to me that the core of the game is taught to the player in such little time, with such seemingly simple level design.

Thursday, August 28, 2014

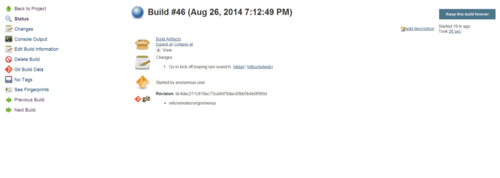

Using Jenkins with Unity

Going off my last post where I used a batch script to automate Unity builds, I decided to take it a step further and integrate Jenkins, the popular CI software, into the process.

With Jenkins, I can have it poll the Demons with Shotguns git repository and detect when changes are made, at which point it'll perform a Unity build (building the Windows target) and archive the build artifacts.

What's great about this is that I can clearly see which committed changes relate to which build, helping me identify when, and more importantly where, a new bug was introduced.

I currently have it set to keep a backlog of 30 builds, but you can potentially keep an infinite number of builds (limited to your hard drive space, of course).

So how do you configure this? Assuming you have Jenkins (and the required source control plugin) installed already, create a new job as a free-style software project. In the job configuration page, set the max # of builds to keep (leave blank if you don't want to limit this). In the source code management section, set it up according to whichever source control software you use (I'm using git). This section, and how you set it up, is going to vary greatly depending on which source control software you use.

Under build triggers, select Poll SCM and enter a schedule in cron syntax based on how frequently you want to poll the source repository for changes.

Under the build section, add an Execute Windows batch command build step. You then script up which targets you want to build (you can use the script in my previous post as a template).

Under post-build actions, add Archive the artifacts. In the files to archive text box, set up the fileset masks you want. For a standalone build it would look like "game.exe,game_Data/**".

That's it! I do know that there is a Unity plugin for Jenkins that'll help run the BuildPipeline without having to write a batch script but I never had success in getting it running so I just went this route.

Automating Unity Builds

I wanted a way to automate building Unity projects from a command line, but to also commit each build to a git repo so I can keep track of the builds I make (in case something breaks, I can go back to previous builds and see where it might have broken). This is my poor man's CI process.

Here's the script in all its glory.

As you can see, it's nothing special. Simply plug in the path to your project and where you want the exe to be written. Adding other build targets is trivial as well. Best part of this: it doesn't require the Pro version of Unity at all!
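As a rough sketch of what such a script does (written in Python rather than as a Windows batch file, and not the original script), the steps boil down to one headless Unity invocation followed by a git commit. The `-quit`, `-batchmode`, `-projectPath` and `-buildWindowsPlayer` arguments are Unity command-line arguments from that era; every path, function name, and the idea of committing into a separate builds repo layout are assumptions for illustration.

```python
import subprocess


def unity_build_command(unity_exe, project_path, output_exe):
    """Build the argument list for a headless Windows standalone build."""
    return [
        unity_exe,
        "-quit",        # exit the editor when the build finishes
        "-batchmode",   # run without opening the editor UI
        "-projectPath", project_path,
        "-buildWindowsPlayer", output_exe,
    ]


def git_commit_commands(builds_repo, message):
    """Commands that record the finished build in a git repo of builds."""
    return [
        ["git", "-C", builds_repo, "add", "--all"],
        ["git", "-C", builds_repo, "commit", "-m", message],
    ]


def build_and_commit(unity_exe, project_path, builds_repo, exe_name, message):
    """Run the build, then commit the output (hypothetical layout)."""
    output_exe = f"{builds_repo}/{exe_name}"
    subprocess.run(unity_build_command(unity_exe, project_path, output_exe), check=True)
    for command in git_commit_commands(builds_repo, message):
        subprocess.run(command, check=True)
```

Keeping the command construction separate from the code that runs it makes it easy to add another build target by adding another argument list.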

This solution is temporary. I'm going to wrap this in Jenkins so that it'll detect git commits then build and archive the game's .exe. More on that soon!

Monday, July 7, 2014

How to program independent games by Jonathan Blow - A TL;DR

This is a talk about productivity, about getting things done, more than anything. Programmers that get their computer science degree are often taught how to optimize code, but this generally comes at a great expense to productivity. Indie game devs have to wear several hats (if not all of the hats), so time is too precious to be wasted.

There are several examples Blow goes through to illustrate this, some of which I think are very weak arguments given modern APIs (I'm referring to his hash table vs. arrays argument), but the majority of them are spot on. One that stood out in particular is the urge to make everything generic when it may not be necessary. More often than not, a method you're writing will be a one-off, only used to perform some type of action on one type of object, so time is absolutely wasted trying to make that method work on an entire hierarchy of objects.

The biggest takeaway is simply this: the simplest solution to implement is almost always the correct one. Get it done. Move on. Fix it/optimize it only when you absolutely need to.

Monday, April 21, 2014

Creating a flexible audio system in Unity

Playing only one audio clip at a time can be a problem in scenarios where you have an AudioSource attached to a prefab, your Player for example, and you have multiple audio clips you'd like to play in quick succession. Say your player jumps, so you play a jumping sound effect. While that sound effect is still playing, the player gets hit by something, so you swap out the audio clip and play a player hit sound effect. If you're using a single AudioSource, that cuts off the jumping sound effect that was still playing. It'll sound bad, jarring and confusing to the player. The most obvious solution is to simply attach a new audio source for every audio clip you'd like to play, but that may get nightmarish if you end up having a lot of possible audio clips to play.

My solution has been to create a central controller that listens for game events, obtains a pooled AudioSource game object and places it in the scene at a specified location (in case the audio clip is a 3D sound), loads it with a specified AudioClip, plays it, and then returns the instance to the object pool for later use. This allows you to play multiple audio clips at a single location, at the same time, without them cutting each other off. You also get the benefit of keeping your game prefabs clean and tidy.

I'm always reluctant to share my code because I use StrangeIoC, which not everyone is using (though you probably should!) and the code structure may seem alien, but a keen developer should be able to adapt the solution to their needs. Let's go through a working example.

I've attempted to comment this Gist well enough so that people who aren't familiar with StrangeIoC can still follow along. The basic execution is:

- Player is hit, dispatch a request to play the "player is hit" sound effect

- This is a fatality event, dispatch a request to also play the "player fatality" sound effect

- PlaySoundFxCommand receives both events

- For each separate event, attempt to obtain an audio source prefab from the object pool. If one is not available, it will be instantiated

- If the _soundFxs Dictionary doesn't already have a reference to the requested AudioClip, load it via Resources.Load and store reference for future calls

- Setup the AudioSource (assign AudioClip to play, position, etc)

- Play the AudioSource

- Start a Coroutine to iterate every frame while the AudioClip is still playing

- Once the AudioClip is done, deactivate the AudioSource and return it back to the object pool
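The flow above can be sketched without Unity or StrangeIoC. The following is a minimal, engine-free illustration in plain Python of the two ideas doing the work: an object pool of audio sources and a dictionary cache of loaded clips. `FakeAudioSource`, `SoundFxPlayer`, and the `load_clip` callback (standing in for Resources.Load) are hypothetical names, not the code from the Gist, and the coroutine that waits for the clip to end is reduced to an explicit `finish` call.

```python
class FakeAudioSource:
    """Stand-in for a Unity AudioSource (hypothetical, for illustration)."""

    def __init__(self):
        self.clip = None
        self.position = None
        self.active = False


class SoundFxPlayer:
    def __init__(self, load_clip):
        self._load_clip = load_clip  # stand-in for Resources.Load
        self._clips = {}             # clip name -> loaded clip (cache)
        self._pool = []              # idle audio sources ready for reuse

    def play(self, clip_name, position):
        # Reuse a pooled source if one is idle, otherwise create a new one.
        source = self._pool.pop() if self._pool else FakeAudioSource()
        # Load the clip only the first time it is requested.
        if clip_name not in self._clips:
            self._clips[clip_name] = self._load_clip(clip_name)
        source.clip = self._clips[clip_name]
        source.position = position
        source.active = True
        return source

    def finish(self, source):
        """Call once the clip is done: deactivate and return to the pool."""
        source.active = False
        self._pool.append(source)


player = SoundFxPlayer(load_clip=lambda name: f"clip:{name}")
hit = player.play("player_hit", (0, 0))
fatality = player.play("player_fatality", (0, 0))
print(hit is not fatality)  # two sources play side by side
```

Because each request gets its own source, the "player is hit" and "player fatality" effects never cut each other off, and a finished source is reused instead of being instantiated again.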

Monday, April 14, 2014

Thursday, March 27, 2014

Reducing Your Game's Scale: Save It For The Sequel!

Friday, March 21, 2014

The Importance of Player Feedback & Subgoals: Playtest Results - 03/14/2014

Here is a clip from one of the recordings I took.

Importance of Feedback

My biggest initial takeaway is the importance of player feedback for even the smallest actions. From jumping to obtaining a frag, there needs to be feedback provided. The player not only needs to be given feedback to assure him that his actions were executed, but also to be rewarded for the things he does so that they're worth doing again. Feedback can be provided in numerous ways, from elegant sprite animations to subtle or acute particle effects. A small, brief dramatic sequence after a frag can make the frag all the more rewarding, thrilling and special, as is so awesomely done in Samurai Gunn.

A player swats back another player's bullet for a kill in Samurai Gunn.

Fragging a demon with a shotgun in Overtime.

Importance of Subgoals

Currently, Overtime has only one goal: kill all other players. There is very little else the player needs to focus on or worry about. This is a problem, as the game gets boring quickly. Once you've killed the other players a handful of times, you've experienced all that there is to offer and lose interest in playing any further.

It could be argued that platforming (successfully negotiating jumps to make your desired mark) and ammo management (collecting ammo packs to ensure you always have ammo) are also subgoals, but I feel they are too subtle. This may just be the nature of simple deathmatch mode in general; I do plan to add other game modes which will add more exciting subgoals for the player, I'm sure.

Samurai Gunn has environmental hazards and destructibles. This gives players more subgoals: avoid accidental deaths and shape your environment to your advantage (you can destroy certain tiles to the point where they become hazards). Players in Samurai Gunn can also engage in defensive actions, engaging in mini sword fights to parry player attacks and swatting back player bullets. This not only gives players a grander sense of control over their ultimate fate, but also an entirely different set of actions and required skills.

This was a great round of playtesting and really highlighted serious gaps in Overtime's design, which I'll need to address. The above GIF of Samurai Gunn does such an incredible job of summing up the entire game, its mechanics, the level of polish and feedback, goals and dimensions in just under a second of gameplay. If you need longer than a second to capture the total essence of your game, you should step back and start rethinking your design.

Wednesday, March 19, 2014

Friday, March 7, 2014

Addendum: 2D Platformer Collision Detection in Unity

NOTE: Please see Yet Another Addendum to this solution for important bug fixes.

This is an addendum to my original post, 2D Platformer Collision Detection in Unity. The solution explained in that post was a great "start", but ultimately had problems which I'd like to go over and correct in this post, so that I don't lead anyone too astray!

The Problem

The Solution

Source: Gamasutra: The hobbyist coder #1: 2D platformer controller by Yoann Pignole

Tuesday, January 14, 2014

Single Camera System For Four Players

Why not have both? It's pretty trivial to have maps that anchor the camera to a single spot for small maps, while allowing split-screen cameras for larger maps. After some playtesting, I found the split-screen cameras pretty annoying due to the small screen real estate they provided for each player. I didn't want to scrap the idea of big maps entirely. So, is it possible to create a single camera that can follow up to four different targets? I soon realized that the type of camera I ended up needing is a camera in the style of a fighting game.

Fighting games, such as Super Smash Bros. or even wrestling games, feature single screen cameras that track multiple targets, zooming in and out as the targets get closer and farther away from each other, respectively. This is done by some vector math magic (it's not really magic, as you'll see).

So let's go through the requirements of the camera system:

- Follow up to four targets, keeping them within screen view at all times.

- The camera should always be focused on the relative center of all four targets.

- As targets move farther away from each other, zoom camera out an appropriate amount of distance.

- As targets move closer to each other, zoom camera in, clamping the zoom factor to a specified amount.

To meet these requirements, we need to answer three questions:

- Based on all targets' current positions, what are the minimum and maximum positions?

- What is the center point between the minimum and maximum positions?

- How far do we need to zoom to keep all targets within view?

Let's add some diagrams to help visualize this better (the scale is all wrong, I know, but bear with me!).

Finding the center of these two positions is trivial. Simply add the Min and Max vectors and multiply by 0.5 (favor multiplication over division for performance reasons).

((8, 7) + (31, 14)) * 0.5 = (19.5, 10.5)
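The same calculation can be sketched in code. This is a minimal, engine-free example in plain Python (in Unity you would use its vector types instead); the function names and the four sample target positions are made up for illustration and chosen so that their bounds match the Min (8, 7) and Max (31, 14) used above.

```python
def bounds(positions):
    """Return the component-wise (min, max) of a list of (x, y) positions."""
    xs = [x for x, _ in positions]
    ys = [y for _, y in positions]
    return (min(xs), min(ys)), (max(xs), max(ys))


def center(min_pos, max_pos):
    """Midpoint of the min and max positions: (min + max) * 0.5."""
    return ((min_pos[0] + max_pos[0]) * 0.5, (min_pos[1] + max_pos[1]) * 0.5)


# Hypothetical positions for four players.
targets = [(8, 10), (31, 7), (20, 14), (15, 9)]
min_pos, max_pos = bounds(targets)
print(min_pos, max_pos)
print(center(min_pos, max_pos))
```

Note that the minimum and maximum are taken per axis, so neither needs to coincide with an actual player position.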

Great! We now have the target position that our camera will use to follow. This position will update as our players move, ensuring we're always at the relative center of them. But we're not done just yet. We need to determine the zoom factor.

Quick side note about the zoom factor. When developing a 2D game, you normally use 2D vectors (as we've been doing so far) and an orthographic camera, which ignores the z-axis (in Unity, not really, but the depth is used differently as objects don't change size as the z-axis changes). If you were developing a 3D game, you'd be using 3D Vectors and a perspective camera. Perspective cameras have depth according to their z-axis position. However, determining the zoom factor for both 2D and 3D is quite similar, just how you apply the value differs.

We've already determined that the X and Y coordinates of our camera need to be (19.5, 10.5), as that's the relative center of all targets on the X and Y axes. What you need now is a vector that's perpendicular to the X and Y coordinates we calculated above. That's where the cross product formula comes in. The more astute reader may be screaming "you can't perform cross product on 2D vectors!" right now. Yes, you're absolutely correct, but bear with me.

The cross product of two vectors gives us a vector that's perpendicular (at a right angle) to both.

Source: Wikipedia

The diagram above shows the cross product of the red and blue vectors as the red vector changes direction, with the resulting perpendicular green vector. Notice how the magnitude of the green vector changes, getting longer and shorter as the angle between the red and blue vectors changes. This is exactly what we need: a vector that's perpendicular to our camera's (X, Y) target position coordinates, whose magnitude changes appropriately based on the angle.

As mentioned before, you can't perform the cross product of 2D vectors. So instead, we'll pad our 2D vectors with a z coordinate of 0.

(19.5, 10.5, 0) x (0, 1, 0) = (0, 0, 19.5)

x is the symbol for cross product. We use a normalized up vector as our second argument so that the resulting vector is of maximum distance. Using the Z value of 19.5, we can now set the zoom factor. Since orthographic cameras don't technically zoom in the same sense as a perspective camera, we instead change the orthographic size, which provides the same effect.
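For anyone who wants to check the arithmetic, here is a small sketch of the cross product and the zoom value derived from it, again in plain Python rather than Unity code. The `cross` function is the standard 3D formula; `zoom_from_center` and its `min_zoom`/`max_zoom` limits are hypothetical names and values added to illustrate the clamping requirement listed earlier.

```python
def cross(a, b):
    """Standard 3D cross product of two (x, y, z) tuples."""
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def zoom_from_center(center_xy, min_zoom, max_zoom):
    """Pad the 2D center with z = 0, cross it with up, and clamp the z result."""
    padded = (center_xy[0], center_xy[1], 0.0)
    up = (0.0, 1.0, 0.0)
    z = cross(padded, up)[2]
    return max(min_zoom, min(max_zoom, z))


print(cross((19.5, 10.5, 0.0), (0.0, 1.0, 0.0)))
print(zoom_from_center((19.5, 10.5), min_zoom=5.0, max_zoom=25.0))
```

The clamped value is what you would assign to the orthographic size for a 2D camera, or use for the z position of a perspective camera.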

Now let's assume that the perspective camera of your 3D game needs to act very much like a 2D platformer (always facing the side, never directly above or below). Instead of altering the orthographic size (because that doesn't make sense for a perspective camera ;) ), we use the result of the cross product to set the camera's z-axis position directly. This will move the perspective camera accordingly, giving us our desired zoom effect.

Here's a video demonstrating the camera movement for two players.